Monitoring can be Expensive

All about my home LAN server:

My home LAN server is woefully under-powered. It was under-powered when I built it, and I upgrade it as infrequently as I can.

When we moved into our new house in 2010, my new ISP-provided broadband router had locked-down firmware. This meant that I could not select my DNS provider of choice if I continued to use the router's built-in DHCP & DNS service. I felt amply qualified to build my own server, having done so many times in the past ...

One of my first jobs was as Commissioning Engineer for a company called TIS Ltd, who used to import bare-bones Unix servers from Convergent Technologies, and later MIPS, from the USA. I would add hard disk drives and RAM, add multiple serial ports to support VT-100-compliant terminals, load the bad block list manually into the drive firmware, load System-V Unix on them, install Informix database, configure lpd, and soak test every machine before shipment to its end user.

We received PC-ATs from Convergent Technologies too. One of them was always on so I could test EGA monitors (it's also where we would play PGA Tour Golf and Leisure Suit Larry). I would add hard disks and RAM to the others, and when the first telnet applications were introduced for DOS, I would install those along with 3Com 3C501 network cards, add Thick Ethernet cards to the Unix servers, run lengths of fat yellow Ethernet cable between the two, and tap into the cable to network them together.

Over the years I built various machines as a hobby, after my career had progressed to software, so by 2010 I had built enough of them that I wasn't scared of doing it again.

My requirements for a home LAN server were:

It had to be very small, because I was in a house rather than a data centre.

It had to be very quiet, because the server would be installed in my dining area.

It had to use very little power, because it would be running 24/7.

It had to serve DNS & DHCP, so I could use whatever public service I wanted.

It had to serve files over the LAN, so I could have a central backup of all my data.

So I looked around for components. I came across a Mini-ITX case with 4 hot-swap bays. I looked around for the best low-power ITX motherboard, and found that extreme low-power motherboards had embedded CPUs on them, so I chose the ASRock A330ION as it had 4 SATA ports and could run passively. The Atom 330 CPU on that ION board was particularly weak, but this was for a server, so it didn't need fancy graphics - I installed Fedora without a window manager.

Next, I needed to see whether there was any NAS software that I wanted to use. I looked at FreeNAS and similar, but Amahi would run on a CPU of that capability, ran on Fedora, provided DNS & DHCP, had a burgeoning app store with pre-configured applications, and could share over SMB, NFS and AFP. All round, it looked like the appropriate server software for me.

That was a long aside! So, my server currently uses a 120GB SSD for boot drive, and 3 x 4TB Seagate Barracuda HDDs in RAID-5.

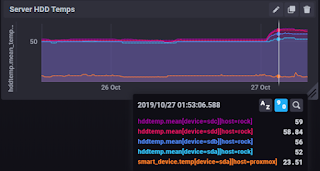

I was tidying my home visualisation panels last week and realised that I wasn't getting all the temperatures from the sensors in that server, so I configured some Telegraf inputs (hddtemp, smartctl, sensors, sysctl, temp, etc.) and created a couple of cells in Chronograf.

Whoa! What's happening here? Look at those HDD temperatures! The operating range of a SATA HDD is typically 0°C - 60°C, so my storage is about as hot as it can get without risking damage, and might actually be throttling!

If the HDDs are that hot, how about the motherboard?

Wow! That's no better! It's nearly 90°C! Ok, this server is too hot! I need to do something with some urgency to fix this!
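A check like the one I did by eye on the panels could be automated. The following is only a minimal sketch: the 60°C ceiling is the HDD limit quoted above, while the warning margin, the function names and the sample readings are assumptions made up for illustration, not values from my graphs.

```python
# Illustrative thresholds in degrees Celsius. The 60 C HDD ceiling is the
# figure quoted in the post; the warning margin is an assumption.
HDD_MAX_C = 60.0
WARN_MARGIN_C = 5.0  # assumed: start warning within 5 C of the ceiling


def classify(temp_c, max_c=HDD_MAX_C, margin_c=WARN_MARGIN_C):
    """Return 'ok', 'warn' or 'critical' for a single temperature reading."""
    if temp_c >= max_c:
        return "critical"
    if temp_c >= max_c - margin_c:
        return "warn"
    return "ok"


def summarise(readings):
    """Map each sensor name to its status."""
    return {name: classify(temp) for name, temp in readings.items()}


if __name__ == "__main__":
    # Made-up sample readings, not real measurements.
    sample = {"sda": 41.0, "sdb": 57.5, "sdc": 60.0}
    for name, status in summarise(sample).items():
        print(f"{name}: {status}")
```

A different ceiling can be passed as `max_c` for other sensors, such as the motherboard, once you know what limit applies to them.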

I immediately logged in to the server and ran "top". The process "mdadm" was using all my CPU. I ran "cat /proc/mdstat", and saw that my RAID-5 array was being checked. It had started just before the increase in temperature, and was only 30% through the check.
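The progress figure can also be pulled out of /proc/mdstat programmatically, for example to graph it next to the temperatures. This is a rough sketch rather than a robust parser: the function name is hypothetical, and the sample text only imitates the usual layout of /proc/mdstat during a check, with invented device names and block counts.

```python
import re

# An array line looks like "md0 : active raid5 ..."; a progress line contains
# something like "check = 30.0%".
DEVICE_RE = re.compile(r"^(md\w+)\s*:")
PROGRESS_RE = re.compile(r"(check|resync|recovery|reshape)\s*=\s*([\d.]+)%")

# Invented sample imitating /proc/mdstat during a check (not real output).
SAMPLE = """\
Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sdd1[3] sdc1[1] sdb1[0]
      7813770240 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]
      [======>..............]  check = 30.0% (1172065536/3906885120) finish=400.5min speed=113000K/sec

unused devices: <none>
"""


def sync_progress(mdstat_text):
    """Return {device: (action, percent)} for arrays with an operation running."""
    progress = {}
    device = None
    for line in mdstat_text.splitlines():
        match = DEVICE_RE.match(line)
        if match:
            device = match.group(1)  # remember which array the next lines belong to
            continue
        match = PROGRESS_RE.search(line)
        if match and device:
            progress[device] = (match.group(1), float(match.group(2)))
    return progress


if __name__ == "__main__":
    print(sync_progress(SAMPLE))
```

On the server itself, the text would come from reading /proc/mdstat instead of `SAMPLE`; an idle array simply produces an empty result.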

All my HDDs were ok and the RAID array wasn't degraded at all, but I can't stomach that level of heat in a server. The server itself has just one case fan: the 120mm fan that shipped with the case. The PSU also has a fan, but it's a FLEX PSU, so it's only a 40mm fan. There is no other active airflow in the case, which is a problem. The HDDs are at the front of the case, and very little air passes them.

So I've bought 2 x Noctua NF-A12x15 PWM fans. I've also bought an ASRock Fatal1ty AB350 motherboard, an AMD Athlon 200GE CPU, a Noctua NH-L9a CPU cooler, a 128GB M.2 NVMe PCIe SSD and 16GB RAM.

With that specification, I should be able to push a lot more air through the system and actively cool the motherboard. The CPU is far more powerful (by about 20x) but still has a TDP of only 35W. It'll be powerful enough to run FreeNAS or XPEnology or any server OS I want to install on it, and it should last me for another 10 years.

I should receive the components over the next few days, so my weekend's activities are sorted: I'll be repeatedly installing server OSs to see what suits me best. I would default back to Amahi, but it currently only runs on an obsolete version of Fedora, so if worse comes to worst, I'll install and configure everything myself. I think that would include Debian Buster, Samba, NFS, Pi-Hole, Webmin, Plex, etc. I would keep OpenVPN, Nessus, Nginx and other services as LXC containers on my Proxmox server (another ITX machine, in another case of the same type, running a Gigabyte GA-C1037UN-EU motherboard).

Hmm ... here's a thought ... I could look at multiple Proxmox servers. If I'm going to be investigating server OSs already, I should investigate clustering or extending options in Proxmox. Goodbye multiple weekends!

